Mitigating the threat of data poisoning in LLM models: Techniques, risks, and preventive measures

Published March 08, 2024. 1 min read

In recent years, the advent of Large Language Models (LLMs) has brought about transformative advancements in natural language processing. However, this progress has not come without challenges, and one particularly insidious threat looms large: data poisoning. In this blog post, we delve into the intricacies of data poisoning, focusing on its implications for LLMs, the methods used to poison such models, and effective preventive measures.

Understanding data poisoning

Data poisoning involves manipulating training data to compromise the performance of machine learning models during training or deployment. In the context of LLMs, data poisoning is a sophisticated attack vector that introduces subtle, malicious alterations into the training dataset in order to influence the model's behavior. The ultimate goal is to undermine the model's accuracy and integrity, or even to inject biases that align with the attacker's objectives.

Data poisoning attack example

To illustrate the potential impact of data poisoning, consider a scenario where a malicious actor seeks to manipulate sentiment analysis in a language model designed for product reviews. In a legitimate training dataset, positive and negative reviews contribute to a balanced learning experience. However, a nefarious actor strategically injects reviews that are presented as positive but carry subtly negative sentiment, distorting the model's notion of positivity. As the model trains on this manipulated dataset, it unwittingly learns distorted associations, compromising its ability to accurately discern genuine sentiments. This intentional poisoning not only undermines the model's reliability but also shows how insidiously data poisoning can shape the behavior of Large Language Models.
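The scenario above can be sketched with a toy example. This is a minimal illustration, not an LLM: it assumes a hypothetical word-count sentiment scorer (`train_word_scores`, `predict`) and made-up reviews, and it models the attack as negative wording paired with a positive label. It only shows how a handful of such samples can flip what a model associates with particular words.

```python
from collections import Counter


def train_word_scores(reviews):
    """Score each word: +1 per positive review containing it, -1 per negative."""
    scores = Counter()
    for text, label in reviews:
        for word in set(text.lower().split()):
            scores[word] += 1 if label == "positive" else -1
    return scores


def predict(scores, text):
    """Classify by summing word scores; unseen words contribute 0."""
    total = sum(scores[word] for word in set(text.lower().split()))
    return "positive" if total > 0 else "negative"


# Hypothetical clean training data.
clean = [
    ("great product works well", "positive"),
    ("love it great value", "positive"),
    ("terrible quality broke quickly", "negative"),
    ("awful terrible waste of money", "negative"),
]

# Hypothetical poisoned samples: negative wording labeled as positive.
poison = [
    ("terrible awful product", "positive"),
    ("awful terrible experience", "positive"),
    ("terrible awful terrible", "positive"),
]

clean_model = train_word_scores(clean)
poisoned_model = train_word_scores(clean + poison)

probe = "terrible awful"
print(predict(clean_model, probe))     # negative
print(predict(poisoned_model, probe))  # positive
```

Three poisoned reviews are enough here because the toy dataset is tiny; in a real corpus the attacker would need a different volume of samples, and the effect would typically be far less visible.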

LLMs' vulnerability to data poisoning

Large Language Models, such as GPT-3, are highly susceptible to data poisoning because they depend on massive amounts of training data. These models learn intricate patterns and associations from diverse datasets, so even slight alterations can affect what they learn. Attackers exploit this vulnerability by injecting poisoned samples, causing the model to learn and generalize from the manipulated information.

Techniques for poisoning LLM data

Gradient-based methods

Attackers use gradient information from the model, or from a surrogate model, to craft training samples that perturb the model parameters selectively. By exploiting gradients in this way, adversaries can guide the learning process toward biased or compromised outcomes.

Adversarial examples

Crafting adversarial examples for AI data poisoning involves introducing subtle modifications to input data to mislead the model. For LLMs, this could mean injecting sentences or paragraphs with carefully designed alterations that influence the model's predictions.

Data injection attacks

Introducing poisoned samples directly into the training dataset is a straightforward approach. Attackers strategically insert biased or misleading data points, impacting the model's decision boundaries and predictive capabilities.
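To make the effect of data injection on a decision boundary concrete, here is a minimal sketch under strong simplifying assumptions: a hypothetical one-dimensional classifier (`fit_threshold`, `classify`) that places its boundary midway between the two class means, trained on made-up numbers. It is far simpler than an LLM, but the mechanism is the same: mislabeled points pull the boundary toward the attacker's goal.

```python
from statistics import mean


def fit_threshold(samples):
    """Return the midpoint between the class-0 and class-1 feature means."""
    class0 = [x for x, y in samples if y == 0]
    class1 = [x for x, y in samples if y == 1]
    return (mean(class0) + mean(class1)) / 2


def classify(threshold, x):
    """Predict class 1 when the feature is above the threshold."""
    return 1 if x > threshold else 0


# Hypothetical clean data: (feature value, label).
clean = [(1.0, 0), (2.0, 0), (3.0, 0), (7.0, 1), (8.0, 1), (9.0, 1)]

# Injected samples: high feature values deliberately labeled as class 0.
injected = [(10.0, 0), (10.0, 0), (10.0, 0)]

clean_threshold = fit_threshold(clean)
poisoned_threshold = fit_threshold(clean + injected)

print(clean_threshold, poisoned_threshold)  # 5.0 7.0
print(classify(clean_threshold, 6.5))       # 1
print(classify(poisoned_threshold, 6.5))    # 0
```

The injected points move the class-0 mean from 2.0 to 6.0, which shifts the boundary from 5.0 to 7.0 and changes the prediction for an input that the clean model handled correctly.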

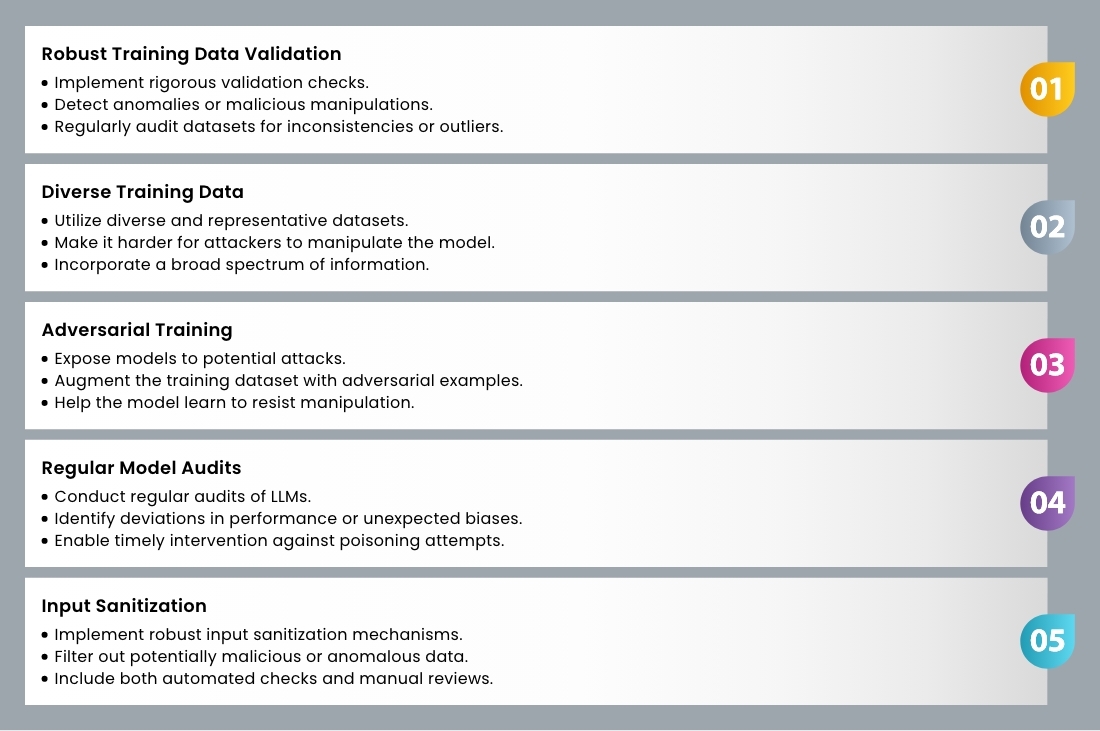

Data poisoning prevention strategies

Leveraging big data services for enhanced security

Big data services, combined with meticulous data preparation, are a potent defense against data poisoning in Large Language Models (LLMs). They provide the infrastructure and tools needed to process, analyze, and manage vast amounts of data, contributing significantly to the robustness of LLMs. Here, we explore how big data services can fortify defenses against data poisoning.

Anomaly detection with big data analytics

Big data analytics platforms, such as Apache Spark and Hadoop, enable the detection of anomalies within large datasets. By applying statistical methods and detection algorithms, these platforms can identify irregular patterns or outliers that may indicate data poisoning. Anomalies flagged during the data preparation phase can be scrutinized further, allowing for preemptive action before training the LLM.

Scalable data validation

Big data services provide scalable solutions for data validation, ensuring that datasets used for training LLMs are thoroughly inspected. By distributing validation processes across a large-scale infrastructure, they can handle vast amounts of data efficiently, making it feasible to validate datasets comprehensively. This scalability improves the ability to identify and rectify instances of poisoned data.

Data diversity through big data integration

Diverse training data is central to safeguarding against data poisoning. Big data services facilitate the integration of datasets from various sources, enriching the training pool for LLMs. This diversity makes it more challenging for attackers to target specific patterns, as the model learns from a broad spectrum of information. Big data integration, when coupled with careful curation during the data preparation phase, contributes to a more resilient model.

Real-time monitoring and analytics

Big data services often include real-time monitoring and analytics capabilities. These features allow for continuous scrutiny of data streams, enabling swift identification of unusual patterns or sudden deviations during LLM training. Real-time monitoring acts as a proactive defense mechanism, providing the agility to respond promptly to potential data poisoning attempts.

Collaborative threat intelligence

Big data platforms facilitate the aggregation and analysis of threat intelligence from diverse sources. By tapping into collaborative threat intelligence networks, organizations can stay informed about emerging patterns and tactics used by attackers engaging in AI data poisoning. This collective awareness helps defenders anticipate data poisoning strategies and adapt their preventive measures accordingly.

Efficient data cleansing with big data tools

Stream-processing tools, such as Apache Flink and Kafka Streams (part of Apache Kafka), can run data cleansing steps efficiently at scale. Prior to LLM training, data cleansing involves identifying and removing corrupt or suspicious data points. By using big data tools for this process, organizations can streamline the cleansing workflow and help preserve the integrity of the training dataset.
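The anomaly detection and cleansing steps described above can be sketched in a few lines. This is a single-machine illustration in plain Python, not a Spark or Flink job; the function names (`robust_z_scores`, `clean_dataset`), the sample records, and the choice of word count as the inspected feature are all assumptions made for the example. It uses the modified z-score, which is based on the median and the median absolute deviation (MAD), with the conventional 0.6745 scaling constant and 3.5 cutoff.

```python
from statistics import median


def robust_z_scores(values):
    """Modified z-scores based on the median and the MAD."""
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # All values are (nearly) identical; nothing stands out.
        return [0.0 for _ in values]
    return [0.6745 * (v - med) / mad for v in values]


def clean_dataset(records, threshold=3.5):
    """Drop exact duplicates, then split records into kept and flagged."""
    unique = list(dict.fromkeys(records))  # de-duplicate, preserving order
    lengths = [len(record.split()) for record in unique]
    scores = robust_z_scores(lengths)
    kept = [r for r, s in zip(unique, scores) if abs(s) <= threshold]
    flagged = [r for r, s in zip(unique, scores) if abs(s) > threshold]
    return kept, flagged


# Hypothetical training records, including a duplicate and a spam-like outlier.
records = [
    "great phone battery lasts all day",
    "screen cracked after one week",
    "fast shipping and good price",
    "works as described no complaints",
    "great phone battery lasts all day",
    ("buy now " * 40).strip(),
    "sound quality is decent for the price",
]

kept, flagged = clean_dataset(records)
print(len(kept), len(flagged))  # 5 1
```

Flagged records are returned rather than silently discarded so that they can be reviewed before training. In a distributed pipeline the same logic would be expressed with the platform's own operators, and word count would normally be only one of several features inspected.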

Conclusion

As we navigate the complex terrain of data poisoning threats to Large Language Models (LLMs), the integration of big data services emerges as a pivotal safeguard. From anomaly detection and scalable data validation to real-time monitoring and collaborative threat intelligence, these services offer a robust framework for fortifying LLMs against evolving risks. As organizations strive to ensure the reliability and resilience of natural language processing applications, the synergy between LLMs and big data services becomes indispensable. To bolster your defense against data poisoning, reach out today for advanced big data services that will elevate the security posture of your machine learning models, shaping a future where LLMs thrive securely.